The meetings of AUSNOG, the Australian Network Operators’ Group, have always managed to assemble a program that represents a fascinating window into the diversity of activities in the Australian communications environment. This year the program included the use of femtocells raised to a useful height by tethered drones to provide fast response to areas affected by natural disasters, the construction of radio links in remote locations and the fascinating use of fibre optic systems as acoustic microphones. Here are my thoughts in response to a presentation by Ciena’s Pradap Rajagopal on the evolution of PON access networks.

The evolution of wired access networks for suburban reticulation has been driven by a special set of economic and technical circumstances. In general, consumers are resistant to paying a very high fee to have their residence connected to a high-speed access data network.

To illustrate these issues, a summary of the costs in the US some 5 years ago noted that it was costing the network constructor some USD $1,000 on average to pass each urban or suburban house, and if the house elected to connect to the service, then another $800 would be spent on the tail line to complete the connection. All this was on a par with the experience of many other FTTH deployments. While the government-funded National Broadband Network (NBN) in Australia used mandatory conversion of every suburban home to this NBN service, in most other environments it’s an optional decision by the consumer. In the American context, the take-up of high-speed broadband is a consumer choice, and less than 40% of households were taking up the option. This means that each connected service has to cover more than its own $800 tail line and the $1,000 network cost of “passing” that house with FTTH. Assuming that the service is deployed in a revenue-neutral or even revenue-positive manner, the total revenue from all households that opt to take the service needs to cover the total network deployment costs, including the costs of passing the “missed” houses that do not take up the service. This implies that the “miss rate” has a big impact on service costs. For example, with an average per passed-house cost of $1,000 and a take-up rate of 40% (a “miss” rate of 60%), each connected service carries $2,500 of passing costs plus the $800 tail line, or some $3,300 per household, instead of the $1,800 per household that would result from a 100% take-up rate. That’s a premium of 83% to cover the costs that result from partial take-up of the service. In many local markets, the alternatives to FTTH, namely DOCSIS, xDSL and even 4G/5G services, look a whole lot cheaper, to both the network operator and to the consumer.
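The arithmetic behind these figures can be sketched in a few lines of Python. This is only an illustration of the reasoning above: the function name and parameters are my own labels, and the model assumes that the cost of passing every house is spread evenly across the houses that connect.

```python
def cost_per_connected_household(pass_cost, tail_cost, take_up_rate):
    """Capital cost carried by each connected household.

    Every passed house incurs pass_cost, but only the fraction
    take_up_rate of houses connects, so each connection carries
    pass_cost / take_up_rate of the shared build plus its own tail_cost.
    """
    if not 0 < take_up_rate <= 1:
        raise ValueError("take_up_rate must be in the range (0, 1]")
    return pass_cost / take_up_rate + tail_cost


full = cost_per_connected_household(1000, 800, 1.0)
partial = cost_per_connected_household(1000, 800, 0.4)
premium = partial / full - 1

print(f"100% take-up: ${full:,.0f} per connected household")
print(f" 40% take-up: ${partial:,.0f} per connected household")
print(f"premium from partial take-up: {premium:.0%}")
```

With the figures quoted above this gives $1,800, $3,300 and a premium of 83%. Varying `take_up_rate` shows how quickly the per-connection cost climbs as take-up falls.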

The outcome of this situation is that the period needed to generate a positive return on the capital invested in the physical infrastructure of the wired access network may be many years. It’s no surprise that the copper pair telephone access network had such a long service life. There was just no economic motivation to upgrade this network, and, on the whole, it was cheaper and easier for the network operator to deploy equipment that improved the digital signal processing capabilities at both ends of the access network than it was to perform any broad upgrade of the wire infrastructure. The shift to DSL technology and the simultaneous deployment of high-speed mobile services also meant that the margins of revenue opportunity for a new generation of access technology, namely optical fibre cable, were small enough to present some significant challenges to both public authorities and network operators.

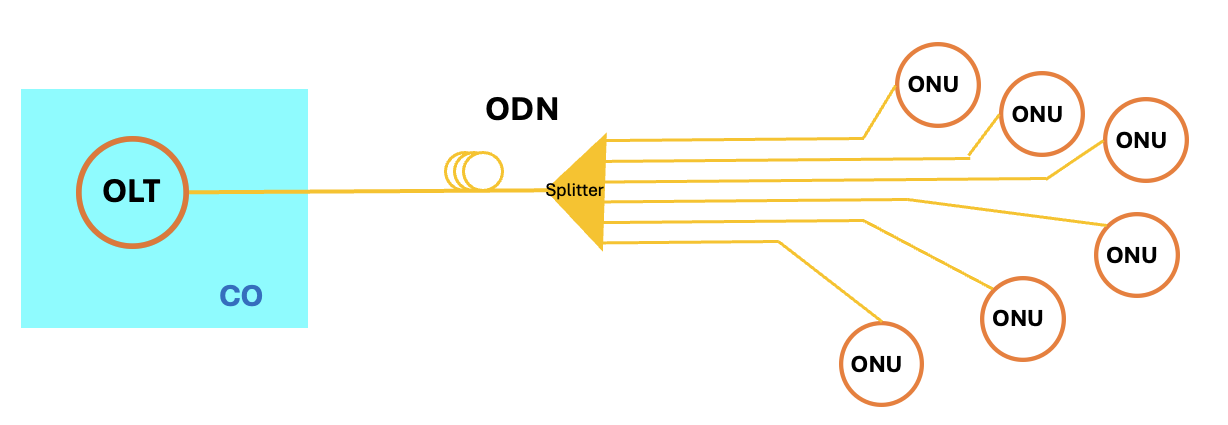

While each copper pair subscriber was provisioned with their own discrete physical copper pair from their location to the network’s access point (or Central Office), this approach was not feasible for a fibre-based access network. The commonly adopted solution was to use a form of shared infrastructure, with a common fibre run from the Optical Line Terminal (OLT) located in the Central Office (CO) to an optical splitter, which then sends the same optical signal to each of the attached Optical Network Units (ONUs). This system is entirely passive in that the signal splitting (and combining in the upstream direction) is performed at the optical level and there are no active electronics within the network. This is a “PON” or Passive Optical Network (Figure 1).

Figure 1 – PON Network Components

OLTs in PON networks typically support splitting the single optical feed to between 16 and 32 ONUs, and in certain cases up to 256 ONUs. The point-to-multipoint topology of PON requires different modes for downstream (OLT to ONU) and upstream (ONU to OLT) transmission. In the case of downstream transmission, typically all ONUs receive the same optical signal from the OLT. This allows the OLT to broadcast in continuous mode, and requires each ONU to filter out all data frames that were not directed to that particular ONU.

For upstream transmission the ONUs cannot all broadcast in continuous mode simultaneously. Instead, the ONUs transmit upstream signals in burst mode and share the upstream channel using time-division multiplexing through a dynamic bandwidth allocation process. For a TDM PON, each ONU is assigned specific timeslots to transmit a burst of upstream data, and at any given time, the OLT is only receiving signals from a designated ONU. Because the distance between the OLT and each ONU can vary, and the burst mode packets arrive at the OLT with random phase, the OLT has to compensate for the resulting phase and amplitude variation using burst-mode clock and data recovery.
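To make the idea of sharing the upstream channel a little more concrete, here is a minimal sketch of a timeslot allocator. It is not the dynamic bandwidth allocation procedure defined in any PON standard, which involves report and grant messages, ranging and service classes. The function name, the 125 microsecond cycle and the 1 microsecond guard interval are all assumptions chosen for illustration.

```python
def allocate_upstream(requests, cycle_us, guard_us=1.0):
    """Divide one upstream cycle among ONUs in proportion to demand.

    requests: dict of ONU id -> bytes queued (hypothetical report values)
    Returns a list of (onu, start_us, length_us) grants. Grants never
    overlap, and a guard interval follows each burst.
    """
    # Only ONUs with queued data receive a timeslot in this cycle.
    active = {onu: queued for onu, queued in requests.items() if queued > 0}
    if not active:
        return []
    usable = cycle_us - guard_us * len(active)
    if usable <= 0:
        raise ValueError("cycle too short for the guard intervals")
    total = sum(active.values())
    grants, start = [], 0.0
    for onu, queued in active.items():
        length = usable * queued / total
        grants.append((onu, start, length))
        start += length + guard_us
    return grants


for onu, start, length in allocate_upstream(
    {"onu1": 3000, "onu2": 1000, "onu3": 0}, cycle_us=125.0
):
    print(f"{onu}: start {start:.2f} us, burst {length:.2f} us")
```

An idle ONU receives no timeslot, so the capacity it would otherwise occupy is shared among the active ONUs. That is the essential property of the dynamic allocation process described above.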

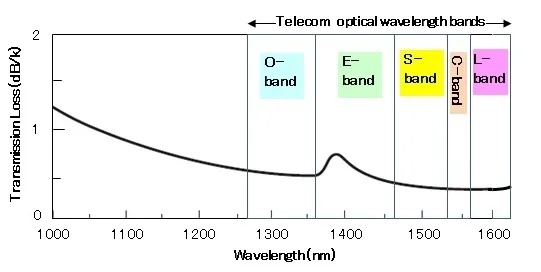

The first generation of PON technology, BPON (the “B” was for “Broadband”), was specified by ITU-T G.983 and used a 622Mbps shared downstream data rate and a 155Mbps upstream rate. The signal was encoded using on/off keying and used 1530nm (C-band) for the downstream signal and 1410nm (E-band) for the upstream signal (the nomenclature for these optical bands is shown in Figure 2).

There has been a steady stream of improvements in PON networks. There have been no changes to the passive optical components, namely the fibre cables and passive optical splitters, but there have been changes in the optical wavelengths and in the optical carrier signal.

In 2003 GPON (ITU-T G.984.x) was released, which defined a 2.5Gbps downstream capacity at 1490nm and 1.25Gbps upstream using 1310nm lasers. The framing of the signal used ATM, extending the conventional framing format used in broadband DSL systems to these PON systems. Soon after, in 2004, the IEEE published a specification for EPON, IEEE 802.3ah, which was a 1.25Gbps symmetric system using Ethernet framing. EPON has weaker levels of error correction and lower split ratios, but it uses 1310nm optics, and this represented a significant cost efficiency over the 1490nm optics used by GPON at the time.

The higher speeds in these two systems relied on advances in Digital Signal Processing (DSP) capabilities, due to the inexorable work of Moore’s Law in providing higher processing capability at lower cost per gate. These advances in signal processing, brought on by continual refinement of the chip production process, were the underpinning factor in the general observation at the time that capacity in access networks increased by a factor of 100 across 20-year intervals.

Some four years later, around 2008, the IEEE and ITU-T both defined their 10Gbit/s PON solutions, namely IEEE 802.3av, 10G-EPON, and ITU-T XG-PON, G.987.x, respectively. 10G-EPON provides symmetric (10 Gbit/s downlink and uplink) and asymmetric (10 Gbit/s downlink and 1 Gbit/s uplink) connections. The optical properties of these 10G systems were the same as the earlier 1G systems, namely a 28dB maximum loss in the 1310nm and 1500nm bands. The fundamental difference between 10G-EPON and XG-PON is that XG-PON is a transport technology for Ethernet as well as TDM and ATM.

At this juncture it may be useful to pause for a second and consider what a 10G PON system actually means. The total capacity in the cluster of ONUs served by a single OLT is 10G. If each of the 32 ONUs that are fed by this OLT is receiving at “full speed,” then while every ONU is receiving the shared optical signal at the 10Gbit/s line rate, the data that is directed to each individual ONU arrives at some 312Mbit/s. If a single ONU is the only active ONU in this network, then the dynamic allocation behaviour of the OLT would assign the entire capacity to this active ONU, and the received data feed would be 10Gbit/s. There are differences between the shared data clocking rate, the average clocking rate under “normal” load, and the minimum data clocking rate when all PON clients are equally active at the same time, and they relate to the splitter ratio used in the PON and the mix of client behaviours that are served by the PON.
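The relationship between the shared line rate and the rate seen by each ONU can be sketched as follows. This is a simple illustration that assumes the capacity is divided equally among the active ONUs and ignores framing and error correction overheads. The function name is my own.

```python
def per_onu_rate_mbps(shared_gbps, active_onus):
    """Equal share of the PON capacity for each active ONU, in Mbit/s."""
    if active_onus < 1:
        raise ValueError("at least one ONU must be active")
    return shared_gbps * 1000 / active_onus


for active in (1, 8, 32):
    rate = per_onu_rate_mbps(10, active)
    print(f"{active:2d} active ONUs: {rate:,.1f} Mbit/s each")
```

With 32 equally active ONUs on a 10G PON each ONU sees 312.5Mbit/s, while a lone active ONU sees the full 10,000Mbit/s.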

These speeds of up to 10Gbit/s have been achieved over fibre systems using simple On/Off Keying (OOK) signal modulation. Some years ago, the point-to-point (P2P) fibre world turned to coherent optics to obtain higher speeds. Coherent optics uses polarisation, phase and amplitude modulation to increase the number of bits that are represented by each transmitted symbol. Using a basic optical system that these days can support the transmission and reception of 25G symbols per second, using Quadrature Phase Shift Keying (QPSK) to encode 2 bits per symbol, and using 2 polarisations, the system can support a data rate of some 100Gbit/s (4x25Gbit/s). The same coherent optical techniques can be used in the context of PON systems, as the passive splitter does not alter either the phase or the polarisation of the light signal. With shorter lengths (up to 20km in general) and lower signal distortion levels, PON solutions that use coherent optics for access networks have a better signal-to-noise ratio, which allows for higher modulation orders than other technologies, and thereby achieve high capacity in the PON network.
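The way these factors multiply can be sketched in a short calculation. This gives the raw line rate only and ignores error correction and framing overheads. The function name is my own, and the 16-point constellation is included purely as an illustration of a higher modulation order rather than as a description of any particular PON specification.

```python
import math


def line_rate_gbps(gbaud, constellation_points, polarisations=2):
    """Raw line rate: symbol rate x bits per symbol x polarisations."""
    # A constellation with N distinct points carries log2(N) bits per symbol.
    bits_per_symbol = math.log2(constellation_points)
    return gbaud * bits_per_symbol * polarisations


# QPSK has 4 constellation points, so it carries 2 bits per symbol.
print(f"25 Gbaud QPSK, 2 polarisations: {line_rate_gbps(25, 4):.0f} Gbit/s")
# A hypothetical 16-point constellation carries 4 bits per symbol.
print(f"25 Gbaud 16 points, 2 polarisations: {line_rate_gbps(25, 16):.0f} Gbit/s")
```

The first line reproduces the 100Gbit/s figure above. The second shows that doubling the bits per symbol at the same symbol rate doubles the raw capacity, which is why the achievable signal-to-noise ratio matters so much.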

In 2023 CableLabs issued the architectural specification of Coherent PON networks using 100G single wavelength capacity (CPON). CPON supports an extended optical power budget and higher spectral efficiency, which for new PON builds implies a capability for greater splitter ratios or for extending the reach of the PON network from around 25km to up to 80km. It’s not just 100G PON systems, as a rather enthusiastic CableLabs blog post reports: “As consumer appetite for bandwidth continues to grow, CPON will prove a scalable and extensible technology for fiber networks for the next 30 years.” Considering the extent of the changes of the last 30 years that’s a pretty mighty claim!

Where does it go from here? The 400Gbit/s P2P optical systems have been around for some years now, and it looks like these systems will be heading into the P2M PON environments in the very near future. New PON rollouts can take advantage of coherent optics to support greater numbers of clients per OLT through the use of higher splitter levels and also longer maximum reach from the OLT to the ONUs. However, it’s not just new PON deployments. One of the key requirements is to be able to upgrade existing PON networks by changing the optical drivers and receivers at the OLTs and ONUs, leaving the entire passive optical infrastructure unchanged. In such scenarios of upgrading existing infrastructure, the result is an increase in the capability of these systems per client, and not necessarily an increase in the scalability of the PON network to service more clients over the same infrastructure.

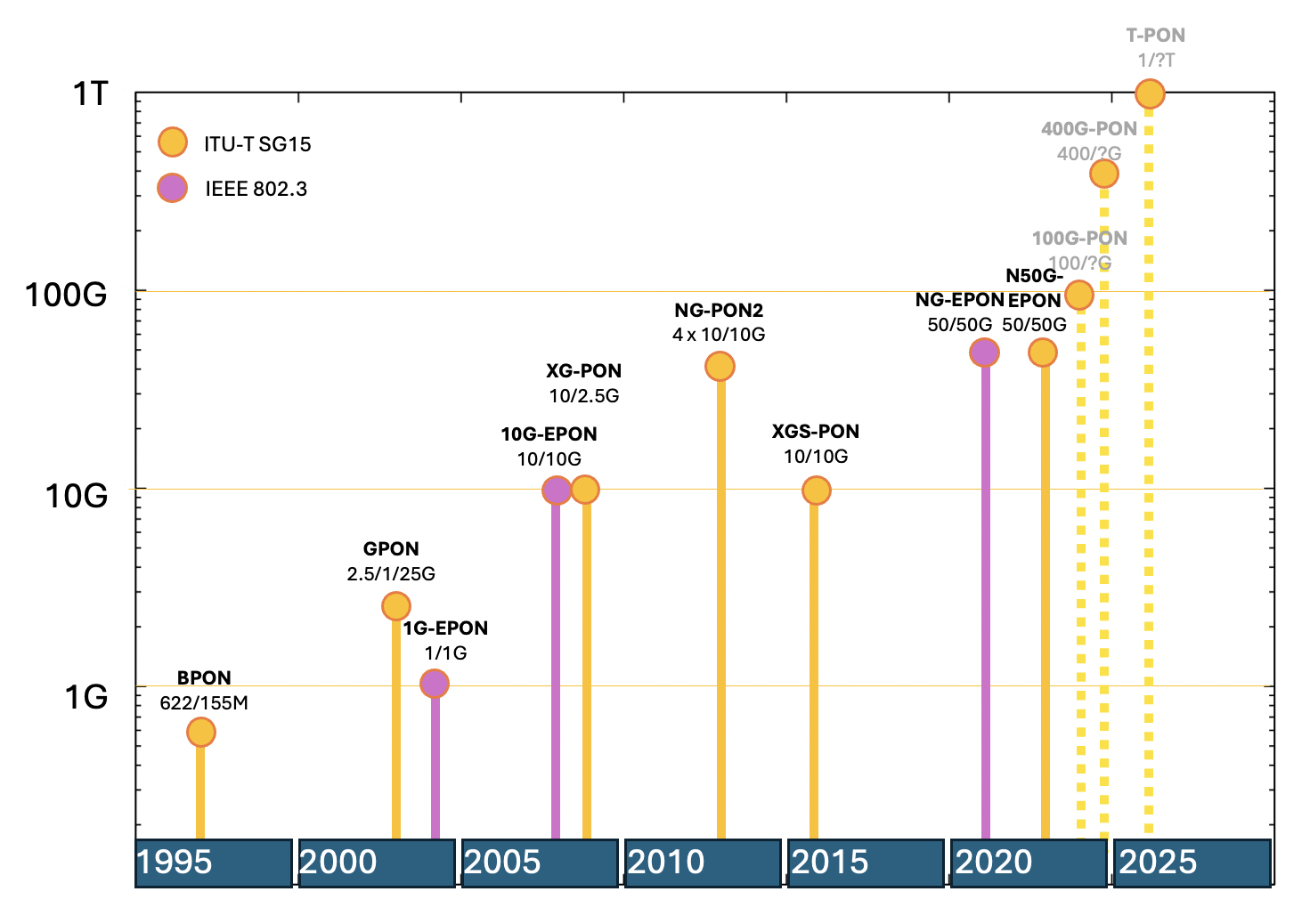

With Coherent PON and digital signal processing capability that supports between 32 and 64 discrete encoding points in the phase, amplitude and polarisation space it is not unreasonable to contemplate 400G, 800G and even Terabit per second PON networks. Technically, that’s a stunning achievement! The evolutionary path of PON capacity over the past few decades is shown in Figure 3.

There are a number of tempering thoughts about future PON evolution, particularly in relation to the unbounded future 30-year vision espoused by CableLabs. One such thought is that it is unlikely that a residential user of an access PON network would pay a significant price premium over a 100G PON service for such a high-speed service, at least in the near-term future. While Terabit per second PON access networks certainly look to be feasible from a technology perspective, working out if such very high-speed systems have an associated use profile and value proposition that can support an economically feasible deployment model for network operators currently falls in the realm of unfinished work. Secondly, there are very real issues with the continued functioning of Moore’s Law in silicon chips. So far we’ve been able to reduce the width of the planar tracks in silicon dies and maintain the same production cost and yield per chip. The cost per gate falls and the unit cost of processing falls. The implication is that for a constant cost (or even a declining cost) the evolution of digital signal processors that support ever higher sensitivity and increasingly crowded discrete encoding points in phase and amplitude signal modulation can be sustained. With track widths heading to sub-3nm it’s not clear how we can keep Moore’s Law functioning with planar integrated circuits, and it is a distinct possibility that we are getting to the end of this 60-year-long adventure with integrated circuitry etched into silicon chips and Moore’s Law.

I don’t know about you, but between these issues with technology and economics I’m certainly not holding my breath for a Terabit optical service delivered directly to my suburban residence. I just don’t think that it’s going to happen in my wired internet access service for many years to come, if at all!