In the coming weeks another Regional Internet Registry will reach into its inventory of available IPv4 addresses to hand out and it will find that there is nothing left. This is by no means a surprise, and the depletion of IPv4 addresses in the Internet could be seen as one of the longest slow motion train wrecks in history. The IANA exhausted its remaining pool of unallocated IPv4 addresses over four years ago in early 2011, and since then we’ve seen the exhaustion of the address pools in the Asia Pacific region in April 2011, in the European and Middle Eastern region in September 2012, and in Latin America and the Caribbean in May 2014. Now it’s the turn of ARIN, the RIR serving the North American region. As of mid June 2015 ARIN has 2.2 million addresses left in its available pool, and at the current allocation rate it will take around 30 days to run through this remaining pool.
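The run-out estimate is simply the remaining pool divided by the recent allocation rate. A minimal sketch, assuming a constant daily rate (in practice allocations are lumpy, so this is only indicative); the rate used here is back-calculated from the two figures quoted above rather than taken from ARIN's published statistics.

```python
def days_to_exhaustion(remaining: int, daily_rate: float) -> float:
    """Days until the pool is empty, assuming a constant allocation rate."""
    if daily_rate <= 0:
        raise ValueError("allocation rate must be positive")
    return remaining / daily_rate

remaining_pool = 2_200_000  # ARIN's available pool, mid June 2015
estimated_days = 30         # the run-out estimate quoted in the text

# Daily rate implied by the two figures above (not a published ARIN number).
implied_rate = remaining_pool / estimated_days
print(f"implied allocation rate: {implied_rate:,.0f} addresses/day")  # 73,333

# The same function answers "what if" questions, e.g. a slower rate:
print(f"at 50,000/day: {days_to_exhaustion(remaining_pool, 50_000):.0f} days")  # 44
```

Because the rate is derived from the estimate, this only makes the arithmetic explicit; any real forecast depends on how the allocation rate moves as the pool drains.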

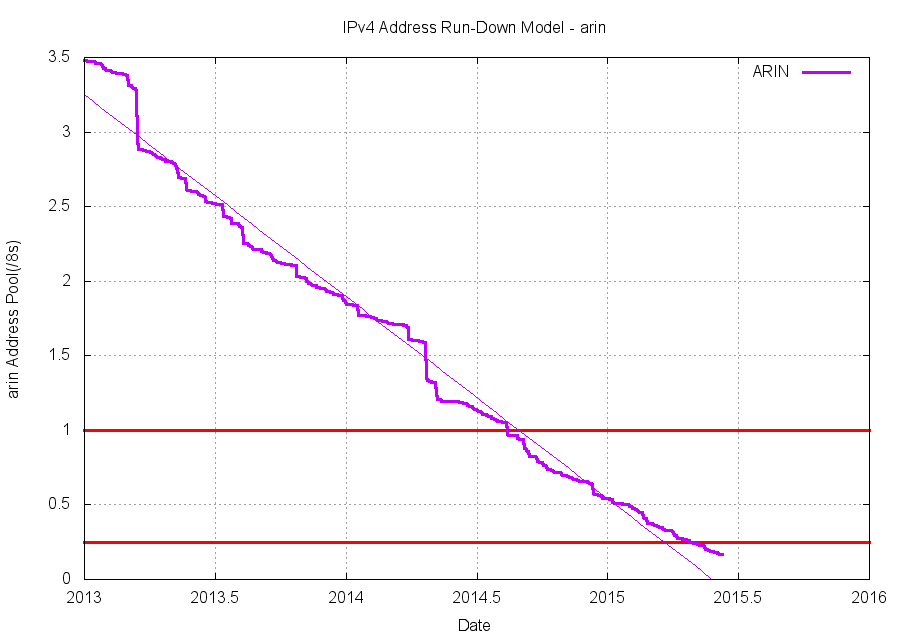

Figure 1 shows the allocations from ARIN’s address pool over the past 2 1/2 years to get to where they are today.

Figure 1 – ARIN IPv4 Allocations

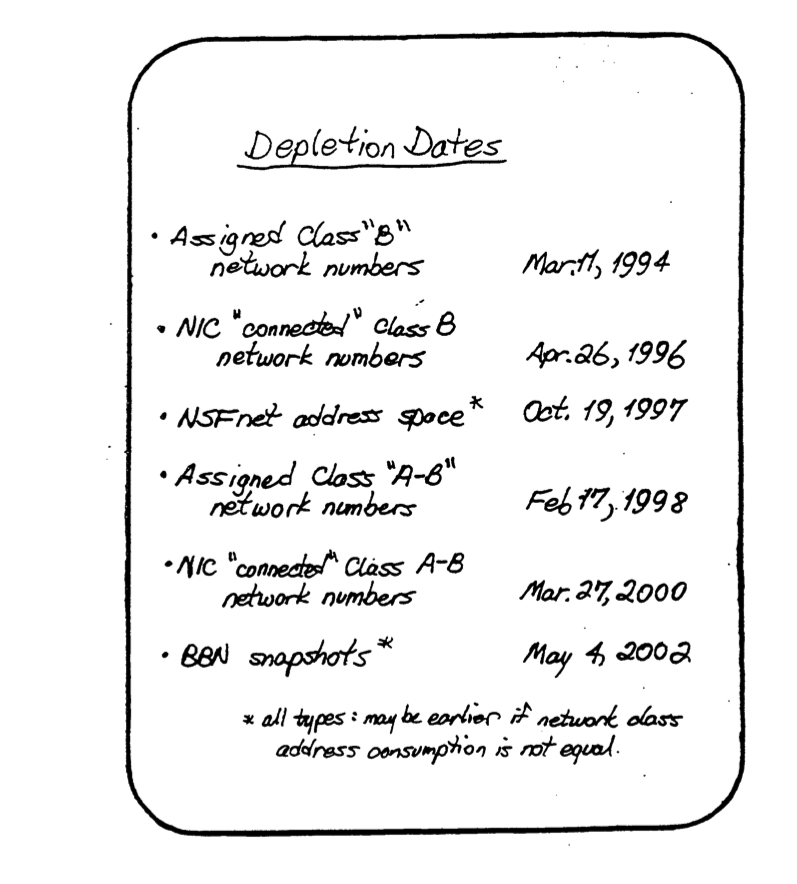

Not only is this a protracted calamity for the Internet, it is also one of the oldest predictions in the brief history of the Internet. Back in 1989, even when the Internet was still being portrayed as an experiment in packet switched networks, predictions were being made of address depletion. Figure 2 shows a prediction made at the IETF meeting in August 1990 by Frank Solensky on the likely dates of IPv4 address depletion.

Figure 2 – Address Depletion – August 1990

Two responses were formulated for this impending disaster: a couple of short-term mitigations, and a long-term answer. One of the short-term measures was an approach to sharing IP addresses, intended to buy a small amount of time to assist in preparing the longer-term response, namely a new Internet Protocol that could uniquely address a vastly larger Internet. This short-term measure, Network Address Translation (NAT), has proved to be unbelievably successful.

NAT appears to have been a runaway success that is apparently disowned by its inventor, Paul Francis. In a recent article in ACM SIGCOMM, Paul writes:

“Given that the problem that I was trying to solve, and the problem that PIX solved, are different, there is in fact no reason to think that John Mayes or Kjeld Egevang got the idea for NAT from [my] CCR paper.

“So what would the lesson learned for researchers today be? This: solve an interesting problem with no business model, publish a paper about it, and hope that somebody uses the same idea to solve some problem that does have a business model. Clearly not a very interesting lesson.”

Current estimates have some 10 billion devices attached to the Internet, yet the address space only spans some 4 billion addresses, and we believe that between 1 and 2 billion of these addresses are in use. There is a huge amount of address sharing going on, and all of this is via NATs. We’ve stretched out the life of IPv4 into some strange and completely unanticipated afterlife. We had thought, naively as it turns out, that we would never hand out the last IPv4 address. We thought that the pressures of address depletion would impel the industry to take up IPv6. Instead we have built an Internet that is around 10 times larger than the pool of addresses it uses. And as the Internet grows, the pace of NAT use grows. For some time over the past decade it looked as though we had turned our collective backs on IPv6 and were searching for ways to cram ever more users into the IPv4 network.

But address sharing makes some things hard, and other things impossible. It’s impossible to call you back if you are behind a NAT. It’s impossible for us to call each other if both of us are behind NATs. NATs work in an asymmetric world of servers and clients. If we want any other model, such as true peer-to-peer communications and services, then we need to enlist brokers and intermediaries to try to force the NATs to behave in ways that are unnatural. NATs only support TCP and UDP. If you find DCCP or SCTP of interest then, no, that’s not going to work. Even innovations such as Multipath TCP are living on the edge, hoping that the NAT won’t clobber TCP options. And some of the more interesting efforts to experiment with flow control systems are forced into UDP, because if it’s not TCP then it has to be UDP! So we see Google’s QUIC living on the edge of acceptability, hoping that the NATs will keep the UDP bindings alive for at least 30 seconds of idle time and will not forcibly reclaim the bindings after a set time limit. If we want to push the network back into the role of a simple packet pushing operation then we need to remove this dependency on network-based address transforms. And the only approach on the table that can achieve this is IPv6.
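To see why a NAT favors the client/server model, here is a toy model of a NAT's UDP binding table. It is a minimal sketch, not any particular vendor's behavior: the class and method names are hypothetical, the 30-second idle timeout simply echoes the figure QUIC is said to hope for, and in this sketch both outbound and inbound packets refresh the timer. An outbound packet creates or refreshes a binding; an inbound packet is delivered only if it matches a live binding, so an unsolicited call to a host behind the NAT is dropped.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

@dataclass
class ToyNat:
    """Hypothetical UDP NAT: bindings expire after idle_timeout seconds."""
    public_ip: str
    idle_timeout: float = 30.0
    next_port: int = 40000
    # (inside_ip, inside_port) -> [public_port, last_activity_time]
    bindings: Dict[Tuple[str, int], list] = field(default_factory=dict)

    def _expire(self, now: float) -> None:
        # Reclaim every binding that has been idle longer than the timeout.
        stale = [key for key, (_, last) in self.bindings.items()
                 if now - last > self.idle_timeout]
        for key in stale:
            del self.bindings[key]

    def outbound(self, inside_ip: str, inside_port: int, now: float) -> Tuple[str, int]:
        """An inside host sends a packet: create or refresh its binding."""
        self._expire(now)
        key = (inside_ip, inside_port)
        if key in self.bindings:
            self.bindings[key][1] = now  # refresh the idle timer
        else:
            self.bindings[key] = [self.next_port, now]
            self.next_port += 1
        return (self.public_ip, self.bindings[key][0])

    def inbound(self, public_port: int, now: float) -> Optional[Tuple[str, int]]:
        """A packet arrives from outside: deliver only via a live binding."""
        self._expire(now)
        for key, binding in self.bindings.items():
            if binding[0] == public_port:
                binding[1] = now  # in this sketch, replies also keep it alive
                return key
        return None  # no live binding: the packet is dropped

nat = ToyNat("203.0.113.1")
print(nat.inbound(40000, now=0.0))                   # None: nobody inside asked
print(nat.outbound("192.168.1.10", 5000, now=1.0))   # ('203.0.113.1', 40000)
print(nat.inbound(40000, now=10.0))                  # ('192.168.1.10', 5000)
print(nat.inbound(40000, now=45.0))                  # None: idle 35 s, reclaimed
```

The first call is the "call you back" problem in miniature: there is no binding for an inbound packet to match until the inside host speaks first, and once the binding times out the path closes again.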

How are we going with IPv6? When can we contemplate throwing away IPv4?

At APNIC we use a sampling technique to measure the proportion of users who can retrieve an object using IPv6. Initially, the results were less than inspiring, and the relative proportion of IPv6 capable users was stubbornly below 1% of the total user population. The problem was that very few Internet access service providers added IPv6 into their portfolio of delivered services. And while the proportion of IPv6 users remained at such a low level, very few access providers felt any business imperative to change their existing offering.
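The headline number from this kind of measurement is a proportion: of the sampled users, how many fetched the test object over IPv6. A minimal sketch of that arithmetic, assuming each sample is an independent user with a yes/no outcome and using a normal-approximation 95% confidence interval; this is a generic illustration, not APNIC's actual measurement pipeline, and the sample counts are made up.

```python
import math
from typing import Tuple

def ipv6_proportion(ipv6_capable: int, sampled: int,
                    z: float = 1.96) -> Tuple[float, float, float]:
    """Estimated IPv6-capable share with a normal-approximation interval."""
    if sampled <= 0 or not 0 <= ipv6_capable <= sampled:
        raise ValueError("need sampled > 0 and 0 <= ipv6_capable <= sampled")
    p = ipv6_capable / sampled
    half_width = z * math.sqrt(p * (1 - p) / sampled)
    # Clamp the interval to the valid range of a proportion.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)

# Hypothetical sample: 8,000 of 1,000,000 users fetched the object over IPv6.
p, lo, hi = ipv6_proportion(8_000, 1_000_000)
print(f"IPv6 capable: {p:.2%} (95% CI {lo:.2%} to {hi:.2%})")
```

With very large daily sample sizes the interval becomes narrow, which is why small shifts below the 1% level can still be tracked; the harder problems in practice are sampling bias and weighting, which this sketch ignores.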

In the wired network the incremental costs of rolling out IPv6 have not been reported to be onerous by those who have ventured into this area. With some care and attention to detail the incremental effort of adding an additional protocol can be managed to a level where the costs are largely absorbed within the network provider’s normal operating budgets.

The mobile story is a little different in the 3G world. A conventional leasing financial model for 3G gateway equipment, where the operator pays the gateway vendor for “connected minutes”, results in a dual protocol connection being supported as two distinct connections, so the “connected minute” meter runs twice as quickly! Perhaps this financial model explained the reticence on the part of the mobile industry for many years. Things are changing in the world of mobile services and IPv6. 4G networks use an all-IP infrastructure without internal virtual circuits. This has drastically reduced the marginal costs to network operators of running dual stack in 4G networks, and today we see providers, such as Verizon in the US, offering native dual stack on their 4G platforms. Other operators are taking a hard look at 464XLAT. Given that the end objective is an all-IPv6 network, and the dual stack phase is purely temporary, does it make sense to assume the end point, build an all-IPv6 carrier network for mobile platforms and, while there is still a need for IPv4, support IPv4 only as an overlay? T-Mobile US thinks so, and this approach lies at the core of their IPv6 mobile service.
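In 464XLAT (RFC 6877), a client-side translator (CLAT) on the device turns IPv4 packets into IPv6 packets, and a provider-side stateful NAT64 (PLAT) translates them back to IPv4 at the edge of the carrier's IPv6-only network. The core trick is embedding an IPv4 address inside an IPv6 prefix, as specified in RFC 6052. A minimal sketch of the /96 case, assuming the RFC 6052 well-known prefix 64:ff9b::/96; an operator may use its own network-specific prefix instead, and a real translator also rewrites packet headers, not just addresses.

```python
import ipaddress

# RFC 6052 well-known prefix; an operator may use its own /96 instead.
WELL_KNOWN_PREFIX = ipaddress.IPv6Network("64:ff9b::/96")

def embed_ipv4(v4: str,
               prefix: ipaddress.IPv6Network = WELL_KNOWN_PREFIX) -> ipaddress.IPv6Address:
    """Synthesize an IPv4-embedded IPv6 address: IPv4 fills the low 32 bits."""
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 prefixes")
    v4_int = int(ipaddress.IPv4Address(v4))
    return ipaddress.IPv6Address(int(prefix.network_address) | v4_int)

def extract_ipv4(v6: ipaddress.IPv6Address,
                 prefix: ipaddress.IPv6Network = WELL_KNOWN_PREFIX) -> ipaddress.IPv4Address:
    """Recover the embedded IPv4 address, as the NAT64 side would."""
    if v6 not in prefix:
        raise ValueError(f"{v6} is not inside {prefix}")
    return ipaddress.IPv4Address(int(v6) & 0xFFFFFFFF)

addr = embed_ipv4("192.0.2.33")
print(addr)                # 64:ff9b::c000:221
print(extract_ipv4(addr))  # 192.0.2.33
```

The carrier network only ever routes the IPv6 form; IPv4 survives as an overlay that exists at the two translation points, which is the design choice described above.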

I suppose there is one further factor that cannot be ignored: the network effect. In a distributed network the level of diversity across providers reflects a tension between the dictates of interoperability, ensuring that anyone can communicate with anyone else, and the desire to innovate in one’s service offering to differentiate it from one’s competitors. If a provider strays too far from the pack then it imperils interoperability with all other providers and devalues its offering. The result is herd-like behavior and a highly conservative approach to innovation, where the desire to do what everyone else does often overwhelms the desire to innovate and be different. But who defines “what everyone else does”? Where are the influence points that propel common change? Who determines the difference between individual aberrant activities and instances of trend setting for subsequent universal adoption? One potential answer here is that it’s all about economics and recent history. In Internet influence terms the United States is still the “core” of the Internet. It may not be the traffic core, or the routing core. But when we look at the profile of technologies that define today’s Internet – the “pack” consensus of the network effect – then this industry appears to look to the United States for its lead.

We’ve been running out of IPv4 addresses for the past four years: first in Asia and the Pacific, then in Europe and the Middle East, then in Latin America. During all this period the United States still had IPv4 addresses. Access to further IPv4 addresses has got tougher in the US over this period, but there was still the perception that the US had not yet run out. All this is changing right now. In the next month or so ARIN will hand out its last IPv4 address. Address exhaustion is no longer someone else’s problem. It’s a problem in the United States as well.

I suspect that this is at the heart of the motivation behind the recent IPv6 moves by some of the behemoths in the US Internet access business, and that this has influenced the overall picture of IPv6 adoption.

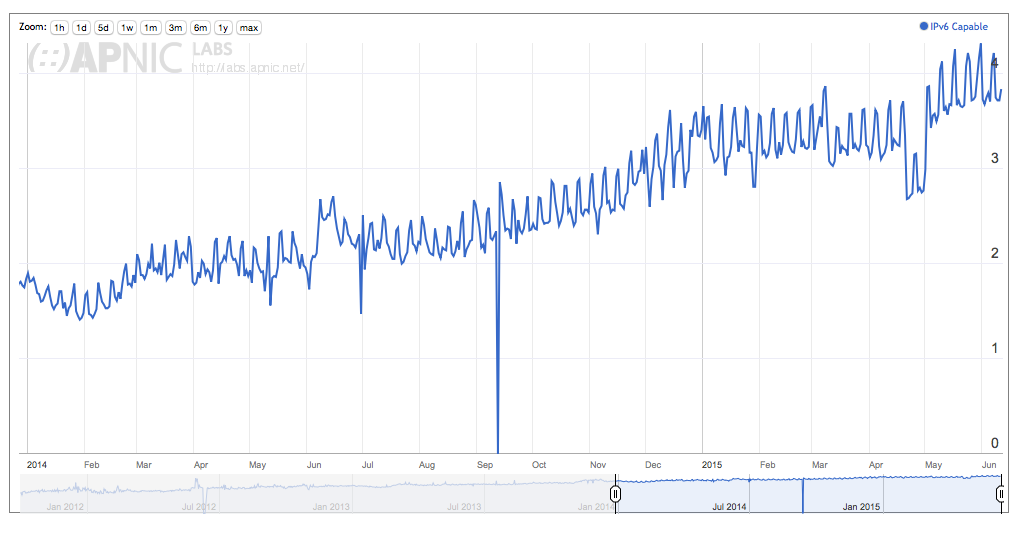

The global rate of IPv6 adoption since the start of 2014 is shown in Figure 3.

Figure 3 – Proportion of IPv6 Users – January 2014 – June 2015

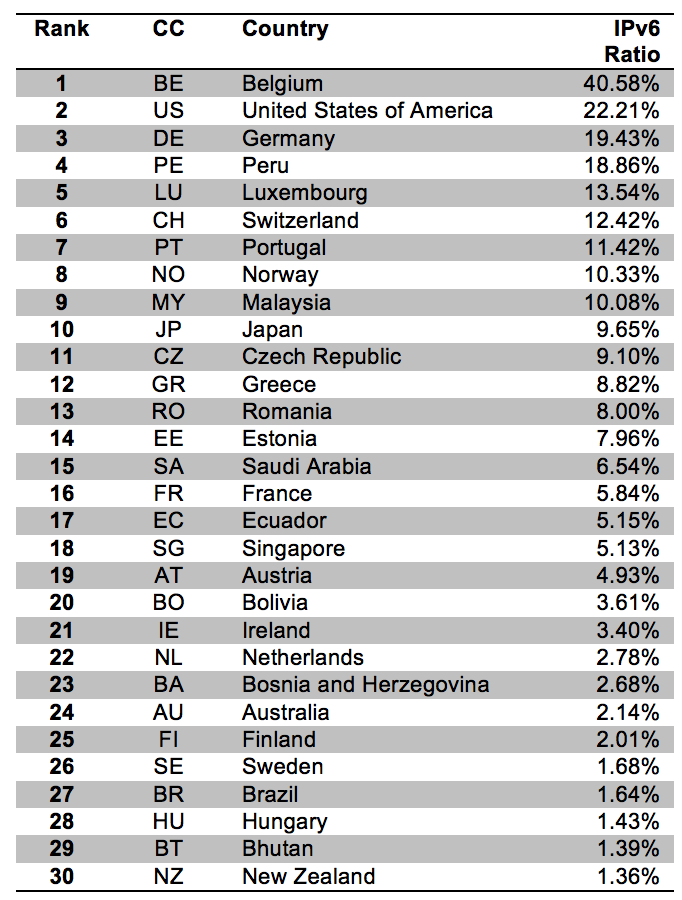

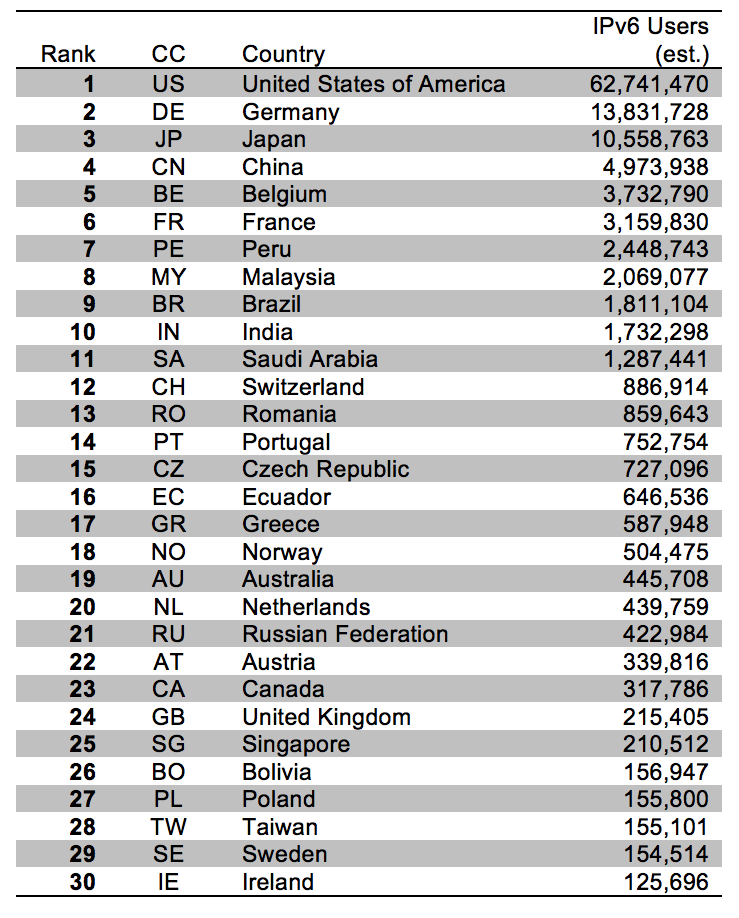

The ranking of the top 30 countries with IPv6 deployment above 1% is shown in Figure 4.

Figure 4 – Top 30 countries – IPv6 deployment

The IPv6 picture in Belgium is impressive, with almost one half of the users in Belgium now IPv6 capable. Similarly, the picture in the United States appears to be radically different from that of a year ago, with almost one quarter of US users now on IPv6. Some 30 countries now have IPv6 deployment rates in excess of 1%. But if we are looking for points of industry influence, and the extent to which IPv6 is amassing a network effect, then perhaps we can get a clearer view of IPv6 deployment by estimating the populations of IPv6 users and seeing where they are. Figure 5 also shows the top 30 countries, but this time the ranking is based on the estimated population of IPv6 users in each country.
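Moving from percentages to populations is a single multiplication: a country's estimated Internet user count times its measured IPv6 proportion. A minimal sketch with made-up figures for three hypothetical countries shows why a ranking by proportion and a ranking by population can differ: a small country can lead on the ratio while a large one leads on absolute numbers.

```python
from typing import Dict, List, Tuple

def ipv6_user_estimates(
        countries: Dict[str, Tuple[int, float]]) -> List[Tuple[str, float, float]]:
    """Return (country, ipv6_share, ipv6_users) rows, ranked by ipv6_users.

    countries maps a name to (internet_users, ipv6_share), share in 0..1.
    """
    rows = [(name, share, users * share)
            for name, (users, share) in countries.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)

# Hypothetical inputs, not measured data.
sample = {
    "Country A": (10_000_000, 0.45),   # small, high deployment ratio
    "Country B": (250_000_000, 0.22),  # large, moderate ratio
    "Country C": (80_000_000, 0.18),
}
for name, share, users in ipv6_user_estimates(sample):
    print(f"{name}: {share:.0%} of users, ~{users / 1e6:.1f}M IPv6 users")
```

Country A tops a ranking by proportion but comes last by population, which is the distinction the two country tables draw.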

Figure 5 – Top 30 countries – IPv6 Users

The full extent of the recent moves in IPv6 by Comcast, Verizon, T-Mobile, AT&T and Time Warner Cable in the United States is very impressive. When coupled with the efforts of Deutsche Telekom and Kabel Deutschland in Germany and KDDI in Japan, the IPv6 user populations in these top three IPv6 countries outnumber all the others.

But I’m not sure if this table provides a useful insight into industry influence and IPv6. To what extent do the actions in the access markets in Bolivia or Romania influence decisions in, say, Canada or Singapore over whether to embark on an IPv6 deployment exercise in 2016? Can we identify where industry influence originates? Perhaps the question to ask ourselves about the underlying drivers of investment in IPv6-capable infrastructure is: who defines what is “normal” in the access industry, and who changes this norm?

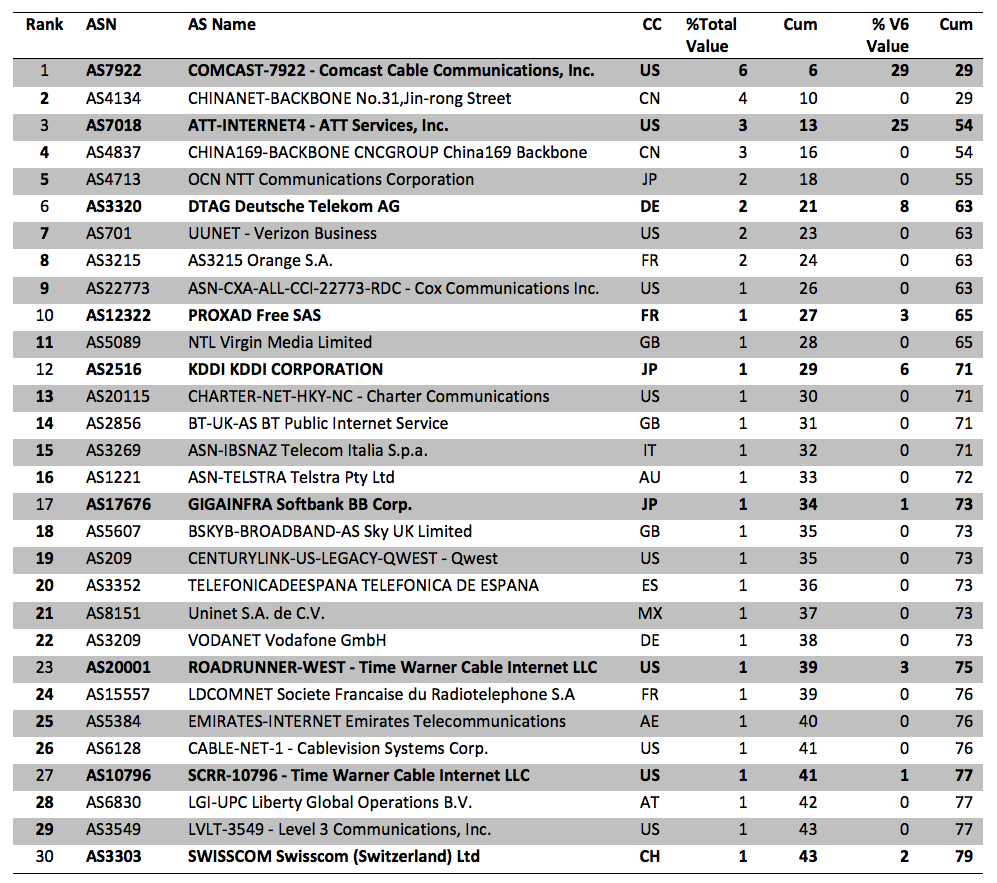

If we use an economic approach to this question, then one possible answer is that the most valuable actors in the industry define the norms of the industry for all other actors. According to this approach, it’s monetary value that drives influence. How can we measure the inherent value of an Internet access service provider? Don’t forget it’s not an absolute quantification of “value” we are after here, but relative rankings. How can we compare the economic value of two access service providers? One aspect of a potential answer lies in the headcount of customers. “Large” access providers are large because of the number of customers. Intuitively, the number of customers equates to the size of an access network, which has a direct bearing on the value of the access business. But there is another dimension to this value comparison, namely the value of the customers served by the network. One way to approximate this value is to divide the GDP of a country by the population of the country, to generate a GDP per capita metric. If we multiply an estimate of the number of customers of each access network by the GDP per capita of the country where those users are located, then we have a way to rank networks by this concept of “network value”.
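The “network value” metric is the product of a network's customer count and the GDP per capita of the country where those customers are located. A minimal sketch of the ranking, assuming the customer counts and GDP per capita figures are already in hand; the networks and numbers below are hypothetical placeholders, and since only the relative order matters, the units are arbitrary. The `top_n_share` helper shows how an aggregation figure such as "the top 30 hold some share of total value" would be computed.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class AccessNetwork:
    name: str
    customers: int         # estimated customer count
    gdp_per_capita: float  # GDP per capita of the network's country (USD)

    @property
    def value(self) -> float:
        # "Network value" = customers x GDP per capita.
        return self.customers * self.gdp_per_capita

def rank_by_value(networks: List[AccessNetwork]) -> List[AccessNetwork]:
    return sorted(networks, key=lambda n: n.value, reverse=True)

def top_n_share(networks: List[AccessNetwork], n: int) -> float:
    """Fraction of total value held by the n most valuable networks."""
    ranked = rank_by_value(networks)
    total = sum(x.value for x in ranked)
    return sum(x.value for x in ranked[:n]) / total

# Hypothetical networks, not real providers or data.
nets = [
    AccessNetwork("Net X", 20_000_000, 55_000),
    AccessNetwork("Net Y", 60_000_000, 8_000),
    AccessNetwork("Net Z", 5_000_000, 45_000),
]
for net in rank_by_value(nets):
    print(f"{net.name}: {net.value:.3e}")
print(f"top-1 share of total value: {top_n_share(nets, 1):.0%}")
```

Net Y has three times as many customers as Net X yet ranks below it, which is exactly the effect of weighting headcount by GDP per capita.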

The most valuable 30 networks by this metric are shown in Figure 6.

Figure 6 – Top 30 Access Providers by “network value”

This is an interesting table in a number of ways. The first is the extent of aggregation in the access business. Just 30 access providers control some 43% of the total value of the Internet’s access business. The second observation is that almost one third of these access providers are actively deploying IPv6. And finally, these nine IPv6-enabled access providers account for almost 80% of the total IPv6 value.

So who is deploying IPv6? The specialized, technically adroit enthusiast ISPs, or the largest mainstream ISPs on the Internet? Predominantly it’s the latter that’s now driving IPv6 deployment. And that’s a new development.

For many years what we heard from the access provider sector was that they were unwilling to deploy IPv6 by themselves. They understood the network effect and were waiting to move on IPv6 when everyone else was also moving. They wanted to move all together and were willing to wait until that could happen. But that was then and this is now. I would be interested to hear what today’s excuse for inaction is from the same large scale access providers. Are they still waiting? If so, then whom are they using as their signal for action? If you were waiting for the world’s largest ISP by value, then Comcast has already taken the decision and has almost one half of its customer base responding on IPv6. Similarly, if you were waiting for Europe’s largest ISP, then Deutsche Telekom has already embarked on its IPv6 deployment program. Overall, some 8% of the value of the Internet by this metric has now shifted to dual stack mode through these providers’ deployment of IPv6, and if just these nine IPv6-capable service providers were to fully convert their entire customer base to dual stack they would account for 16% of the total value of the Internet.
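One plausible reading of the two percentages in this paragraph is that they apply the same value metric weighted two ways: the current figure counts each network's value in proportion to the share of its customers actually seen using IPv6, while the potential figure counts the full value of those same networks as if every customer were converted. A minimal sketch of that calculation under this assumption, with hypothetical inputs.

```python
from typing import List, Tuple

def ipv6_value_shares(networks: List[Tuple[float, float]],
                      total_value: float) -> Tuple[float, float]:
    """Return (current, potential) IPv6 shares of total network value.

    networks: (value, ipv6_fraction) for each IPv6-deploying network, where
    ipv6_fraction is the share of its customers seen using IPv6 (0..1).
    total_value: value summed over every access network, not just these.
    """
    current = sum(value * frac for value, frac in networks) / total_value
    potential = sum(value for value, _ in networks) / total_value
    return current, potential

# Hypothetical: three deploying networks within a total value of 100 units.
deploying = [(10.0, 0.50), (6.0, 0.25), (4.0, 0.75)]
now, full = ipv6_value_shares(deploying, total_value=100.0)
print(f"value on IPv6 today: {now:.1%}; if fully converted: {full:.1%}")
```

The gap between the two numbers is the headroom that remains within networks that have already started, before any new provider has to move.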

I’d like to think that the waiting is now over. I’d like to think that the balance of influence in the network is now shifting to a norm of services that embraces IPv6 in a dual stack service model.

We’ll keep measuring this in the coming months and keep you informed.

Meanwhile, reports of IPv6 deployment on a country-by-country basis, and further down to the level of individual networks’ progress with IPv6, are updated daily at http://stats.labs.apnic.net/ipv6.