At APNIC Labs we publish a number of measurements of the deployment of various technologies that are being adopted on the Internet. Here we will look at how we measure the adoption of DNSSEC validation.

DNSSEC

Security for the DNS has been a vexed topic for many years. The days of a trusting Internet where name resolution transactions are carried across the Internet in an open unencrypted manner should be long over. A situation where clients can neither verify the authenticity of the server that they are communicating with nor directly verify the authenticity of the DNS responses that they receive should not be a feature of today’s DNS. Yet we still see many forms of interference in the operation of the DNS name resolution protocol. Some of this DNS interference is institutionalised in the form of national censorship or court-ordered measures, intended to prevent users from accessing certain network resources. Other cases are related to malware and cyber-attacks intended to deceive user applications into directing their traffic to incorrect destinations. It seems to be an impossible task to enforce a halt to all forms of interference in the DNS. But perhaps it is possible to settle for a lesser objective, where a client is able to assure itself of the authenticity and currency of a DNS response, and reject all DNS responses that fail such tests of authenticity.

DNSSEC is intended to achieve such an outcome. DNSSEC adds a digital signature to DNS resource records, allowing a client to determine the authenticity and currency of a DNS answer, if they so choose. You would think that at this point in time, with a widespread appreciation of just how horrendously toxic the Internet really is, anything that allows a user to validate the authenticity of the response that they receive from a DNS query would be seen as a huge step forward, and we should all be clamouring to use it. Yet the extent of DNSSEC uptake remains an open question with no clear answer. In some areas there is visible movement and visible signs of increasing adoption, while in other areas the response is less than enthusiastic. Many operators of recursive DNS resolvers, particularly in the ISP sector, are reluctant to add the resolution steps to request digital signatures of DNS records and validate them, and very, very few DNS stub resolvers on users’ devices at the edge of the network have any DNSSEC validation functionality. Over on the signing side, the uptake of adding DNSSEC signatures to DNS zones is, well, variable.

DNSSEC has two parts. The first is the attachment of digital signatures to DNS records, so that each DNS response can be validated by a DNS resolver. The second is the validation of these digital signatures by a DNS resolver. There is no real point in incurring the additional overheads in signing DNS responses if no one is validating these signed responses, and equally there is no point in equipping DNS clients to validate signed DNS responses if no one is signing these responses in the first place.

Measuring the first part of the DNSSEC question is challenging. It’s such a simple question: “What fraction of the entire DNS name space has been signed with DNSSEC?” The question assumes two capabilities, namely that we have some idea as to the overall count of DNS names, and secondly, that we can determine the count of signed names. An exact count of all domain names on the Internet is a practical impossibility these days. I guess that it would be possible in theory if every DNS zone administrator allowed full zone transfers, and every zone was able to be fully enumerated. In such a world, a DNS crawler could start at the root zone and follow all the zone delegation records and integrate across the entire DNS name hierarchy. However, much of the DNS is deliberately occluded, and such an approach of top-down crawling is just not viable, even if all DNS zones were enumerable, which is increasingly not the case. There have been a number of measurement exercises that refine this question to something a little more tractable. An overview of these approaches can be found in a recent article on measuring the use of DNSSEC by query profiling.

Answering the second part of the question is what we’ll focus on here, as this is where APNIC Labs has made an important contribution.

Measuring DNSSEC Validation

The question we’d like to answer is: “What proportion of users use DNS resolvers that perform validation of DNS responses?” This is a question that is more easily answered by using a negative formulation of the question: “What proportion of users will be unable to resolve a DNS name if the name is signed with an invalid DNSSEC signature?”

The reason why this is an equivalent question is that when a DNSSEC signature cannot be validated by a resolver, the resolver will withhold the DNS response and return an error code instead. The DNS error that is returned is SERVFAIL, and the conventional action by a client resolver when it receives this error is to try the same query with the next resolver in its resolver list. If the client does not receive a response to its query to resolve this invalidly-signed DNS name then it means that all the locally-configured recursive resolvers are performing DNSSEC validation.

Obviously, if any of these recursive resolvers are not performing DNSSEC validation then the client will receive a response to the query.
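The failover behaviour described above can be sketched as a small simulation. This is an illustrative model only: the `Resolver` class, the `stub_lookup` function and the resolver names are all hypothetical, and a real stub resolver has timeouts and retry policies that are not modelled here.

```python
from dataclasses import dataclass
from typing import List, Optional

SERVFAIL = "SERVFAIL"


@dataclass
class Resolver:
    """Hypothetical model of one recursive resolver in the client's list."""
    name: str
    validates: bool  # True if this resolver performs DNSSEC validation

    def resolve(self, signature_is_valid: bool) -> str:
        # A validating resolver withholds the answer for a badly signed name.
        if self.validates and not signature_is_valid:
            return SERVFAIL
        return "ANSWER"


def stub_lookup(resolvers: List[Resolver], signature_is_valid: bool) -> Optional[str]:
    """Try each configured resolver in turn, moving on after a SERVFAIL."""
    for resolver in resolvers:
        if resolver.resolve(signature_is_valid) != SERVFAIL:
            return "ANSWER"
    return None  # every resolver refused, so the name does not resolve


all_validating = [Resolver("r1", True), Resolver("r2", True)]
mixed = [Resolver("r1", True), Resolver("r2", False)]
```

In this model an invalidly signed name fails to resolve only for `all_validating`. With `mixed`, the second resolver hands back an answer.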

We cannot directly query the DNSSEC capabilities of each DNS resolver, nor can we query each DNS resolver for the count of users who pass queries to it. But we can perform an equivalent measurement by a large-scale sampling measurement.

Measurement by Advertisements

APNIC Labs uses online advertisements to perform such measurements. The advertisement material includes a script component which is executed by the user’s browser when the ad is impressed. The scripting capabilities are highly limited in the context of ads as a mitigation measure against malware distribution, but under certain circumstances ad scripts permit the retrieval of URLs. A URL requires resolution of a DNS name and then an HTTP operation to fetch the identified resource.

The DNS name used in these ads is unique, in that each measurement test in each impressed ad uses a different DNS label. This is to remove the interference from caches in the operation of the measurement.
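One way to generate a name that no cache has seen before is to put a random identifier in the leftmost label. The sketch below assumes a UUID-based label and the placeholder zone `example.com`. The actual label format used in the measurement is not described here, so treat both as illustrative.

```python
import uuid


def unique_test_name(zone: str = "example.com") -> str:
    """Return a DNS name whose leftmost label is a random 32-character hex string.

    The UUID-based label is an assumption made for illustration; any
    scheme that never repeats a label would serve the same purpose.
    """
    label = uuid.uuid4().hex  # 32 hex characters, within the 63-octet label limit
    return f"{label}.{zone}"
```

Because each generated name is new, a recursive resolver cannot answer from its cache and has to query the authoritative server.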

The measurement system is configured to present between 15M and 20M ad impressions per day. The ad impression pattern across the Internet is not uniform, so we use additional data from the UN Statistics Division and the ITU-T to relate the number of ad impressions per country per day to the Internet user count per country per day. The per-country ad data is weighted by the relative user count per country to adjust for this implicit ad presentation bias.
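The weighting step can be illustrated with a short calculation. This is a sketch under stated assumptions: the function name, the country codes and all of the numbers are invented for the example, and the production weighting may differ in detail.

```python
from typing import Dict, Tuple


def weighted_rate(samples: Dict[str, Tuple[int, int]], users: Dict[str, int]) -> float:
    """Combine per-country rates, weighting each country by its user count.

    samples maps country -> (validating impressions, total impressions);
    users maps country -> estimated Internet users. Both are hypothetical inputs.
    """
    weighted_sum = 0.0
    total_users = 0
    for country, (validating, total) in samples.items():
        if total == 0 or country not in users:
            continue  # no usable samples or no user estimate for this country
        weighted_sum += users[country] * validating / total
        total_users += users[country]
    return weighted_sum / total_users if total_users else 0.0


# Invented data: country "BB" receives ten times as many ads as "AA",
# although both have the same number of users.
samples = {"AA": (80, 100), "BB": (100, 1000)}
users = {"AA": 1_000_000, "BB": 1_000_000}
```

With these invented numbers the raw impression-level rate is 180/1100, or about 16%, while the user-weighted rate is 45%, because "BB" no longer dominates the result just by receiving more ads.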

We cannot instrument the user’s browser, so we set up a known set of URL fetches to be performed by the client and configure the URLs such that the test client has to interact, either directly or indirectly, with DNS and web servers that we operate. Then we can infer the behaviour of the test client by looking at the queries we see at our servers. In the case of the DNS the only server that is authoritative for the DNS name is a server operated by APNIC Labs, so by examining the server logs we can determine if a user is attempting to resolve the DNS name into an IP address. For the HTTP object, again the only server that can serve the object is operated by APNIC Labs.

We can infer that a test client is attempting to resolve a DNS name by virtue of the queries for the unique label name being logged at the DNS server. We can tell if the DNS resolution was successful by virtue of the record of the object retrieval at the HTTP server in the server’s logs.
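This inference amounts to joining the two logs on the unique label. The sketch below assumes simplified inputs, namely a list of `(qname, qtype)` pairs from the DNS server and a list of host names from the HTTP server. Real log formats carry far more detail, and the function name is hypothetical.

```python
from typing import Dict, Iterable, Tuple


def resolution_outcomes(
    dns_queries: Iterable[Tuple[str, str]],
    http_fetches: Iterable[str],
) -> Dict[str, bool]:
    """Map each unique label seen in the DNS log to whether its URL was fetched."""

    def label_of(name: str) -> str:
        # Lower-case the name, since resolvers may randomise the case of query names.
        return name.lower().rstrip(".").split(".")[0]

    attempted = {label_of(qname) for qname, _qtype in dns_queries}
    fetched = {label_of(host) for host in http_fetches}
    return {label: label in fetched for label in attempted}


dns_log = [("a1.example.com.", "A"), ("B2.example.com.", "A"), ("b2.example.com.", "AAAA")]
http_log = ["a1.example.com"]
```

Here label `a1` was both queried and fetched, so resolution succeeded, while `b2` was queried but never fetched.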

Measuring DNSSEC Validation

How can we tell if a DNS resolver is performing DNSSEC validation if all we can see is the queries made to the authoritative server of the terminal zone?

A validating resolver will need to make additional queries to build a validation path, querying for a sequence of DNSKEY and DS records. In order to avoid DNS caching we need to ensure that the query names for these DNSSEC records are also unique. In this case wildcard entries will not achieve what we need, as the DNSKEY and DS records will be cached by the DNS. To achieve the DNS behaviour we need for this measurement we use a synthetic DNS delegation, according to the following template:

example.com:

    unique-query-label    NS       server
                          DS       key-hash-value
                          RRSIG    DS signature

unique-query-label.example.com:

    .    DNSKEY    key value
         RRSIG     DNSKEY signature
         A         ip address
         RRSIG     A signature

The delegated domain server will be queried for the DNSKEY record, and the parent domain will be queried for the DS record. As the DNS label is unique, caching will not mask these queries. To achieve this, we use a dynamic DNS server implementation based on a DNS library, where the DNS server is authoritative for both parent and child domains.
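A server that is authoritative for both sides of the delegation has to decide which zone a query belongs to. The sketch below shows only that decision, using the standard DNSSEC rule that the DS record lives on the parent side of the zone cut. The function name and the `example.com` parent zone are placeholders, and this is not the server implementation used in the measurement.

```python
PARENT_ZONE = "example.com"  # placeholder parent zone


def answering_zone(qname: str, qtype: str) -> str:
    """Return the zone (parent or synthetic child) that should answer a query."""
    name = qname.rstrip(".").lower()
    if name == PARENT_ZONE:
        return PARENT_ZONE
    if not name.endswith("." + PARENT_ZONE):
        raise ValueError(f"{qname} is not under {PARENT_ZONE}")
    # The synthetic child zone is the label directly beneath the parent zone.
    label = name[: -len(PARENT_ZONE) - 1].split(".")[-1]
    child_zone = f"{label}.{PARENT_ZONE}"
    # DS for the child apex is answered from the parent side of the zone cut.
    if name == child_zone and qtype.upper() == "DS":
        return PARENT_ZONE
    return child_zone  # DNSKEY, A and everything else belong to the child
```

For a unique label such as `abc123`, a DS query is answered from `example.com`, while DNSKEY and A queries are answered from `abc123.example.com`.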

The structure of the measurement uses two DNS names (and two URLs), a validly signed DNS name and an invalidly signed DNS name.

By using the DNS and HTTP server logs we can assemble a set of criteria to determine if the test client lies behind DNSSEC-validating DNS resolvers:

With two unique DNS names, Test-Valid and Test-Invalid

A test client uses DNSSEC Validation if:

- We record DS and DNSKEY queries for both Test-Valid and Test-Invalid domain names

- The test client retrieves the URL that uses the Test-Valid DNS name

- The test client does not retrieve the URL that uses the Test-Invalid DNS name

A test client partially uses DNSSEC Validation if:

- We record DS and DNSKEY queries for Test-Valid and Test-Invalid domain names

- The test client retrieves the URL that uses the Test-Valid DNS name

- The test client retrieves the URL that uses the Test-Invalid DNS name

Otherwise, the test client does not use DNSSEC validation.
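The criteria above translate directly into a three-way classification. The sketch below assumes that the three observations have already been extracted from the logs as booleans. The function name and the outcome strings are illustrative.

```python
def classify(dnssec_queries_seen: bool, fetched_valid: bool, fetched_invalid: bool) -> str:
    """Classify one test client from its log observations.

    dnssec_queries_seen: DS and DNSKEY queries were logged for both test names.
    fetched_valid:       the URL using the Test-Valid name was retrieved.
    fetched_invalid:     the URL using the Test-Invalid name was retrieved.
    """
    if dnssec_queries_seen and fetched_valid and not fetched_invalid:
        return "validating"
    if dnssec_queries_seen and fetched_valid and fetched_invalid:
        return "partial"
    return "not validating"
```

A client that triggers DS and DNSKEY queries but still fetches the invalidly signed URL lands in the "partial" category, which is consistent with at least one of its resolvers not validating.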

We use the IP address of the end client and a geolocation database to map the test client to a country and add the DNSSEC validation result to the per-country counts. We use the BGP routing table to map the test client to a network, and add the result to the per-network counts.
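The per-network tally can be sketched as a longest-prefix match followed by a count. The route table below is invented for the example, using documentation address ranges and private-use AS numbers. In practice a full BGP table and a more efficient lookup structure would be needed, and the per-country tally would follow the same pattern with a geolocation table.

```python
import ipaddress
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical prefix -> origin AS table, standing in for a BGP routing table.
ROUTES = {
    "192.0.2.0/24": 64500,
    "198.51.100.0/24": 64501,
    "198.51.100.128/25": 64502,
}


def origin_as(addr: str) -> Optional[int]:
    """Return the origin AS of the longest matching prefix, or None if unrouted."""
    ip = ipaddress.ip_address(addr)
    best = None  # (prefix length, AS number) of the best match so far
    for prefix, asn in ROUTES.items():
        net = ipaddress.ip_network(prefix)
        if ip in net and (best is None or net.prefixlen > best[0]):
            best = (net.prefixlen, asn)
    return best[1] if best else None


def tally(results: Iterable[Tuple[str, str]]) -> Dict[Optional[int], Counter]:
    """Count classification outcomes per origin AS from (client IP, outcome) pairs."""
    counts: Dict[Optional[int], Counter] = defaultdict(Counter)
    for addr, outcome in results:
        counts[origin_as(addr)][outcome] += 1
    return counts
```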

DNSSEC Validation Reports

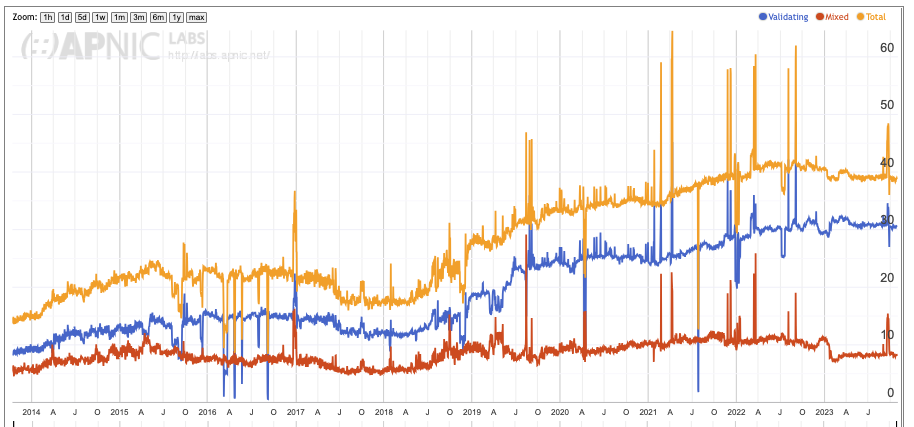

At APNIC Labs we’ve been undertaking this measurement on a daily basis since 2014. The overall results of the adoption of DNSSEC Validation are plotted at https://stats.labs.apnic.net/dnssec/XA.

Figure 1 - Internet Total for Uptake of DNSSEC Validation

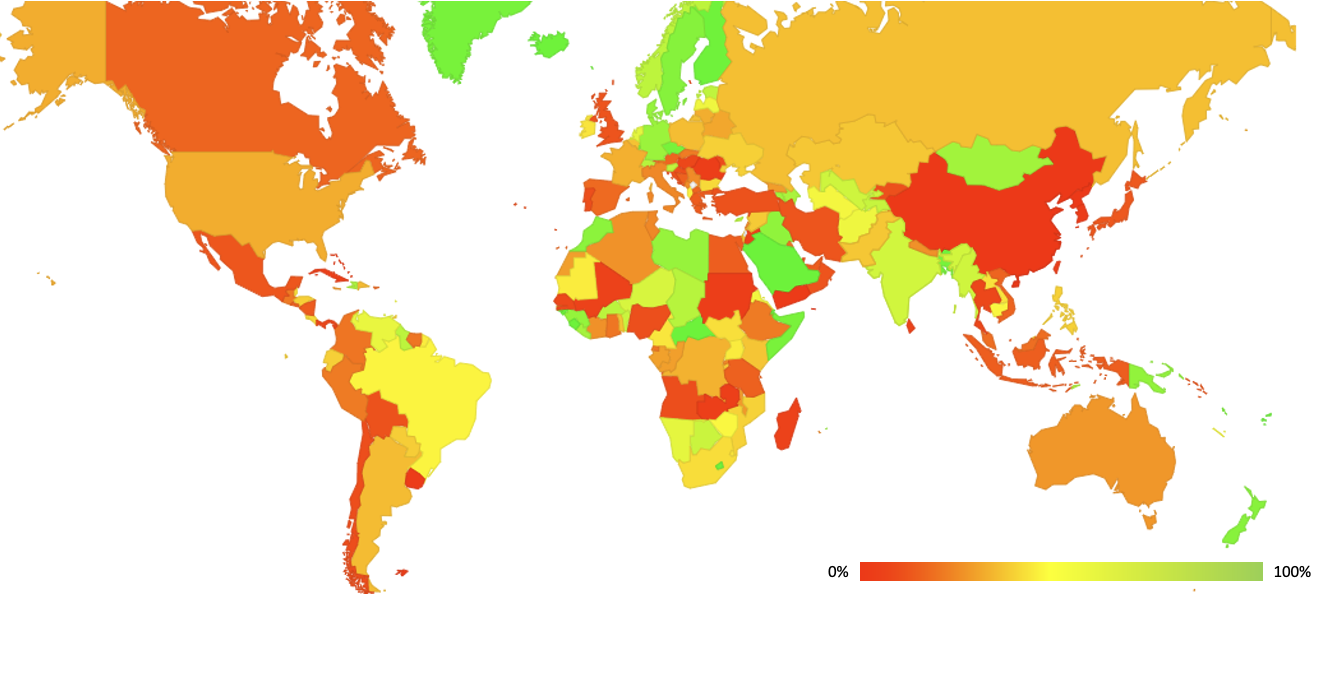

The per-country data is also mapped at https://stats.labs.apnic.net/dnssec. DNSSEC validation rates on a per-country basis are highest in parts of Africa and Scandinavia. In other countries with extensive Internet infrastructure, such as the UK, Canada, Spain and China, the validation rates are quite low.

Figure 2 - Per-Country Totals for Uptake of DNSSEC Validation

The reports at https://stats.labs.apnic.net/dnssec provide an interactive form of navigation that generates time series DNSSEC validation reports down to the level of individual network providers in each country.